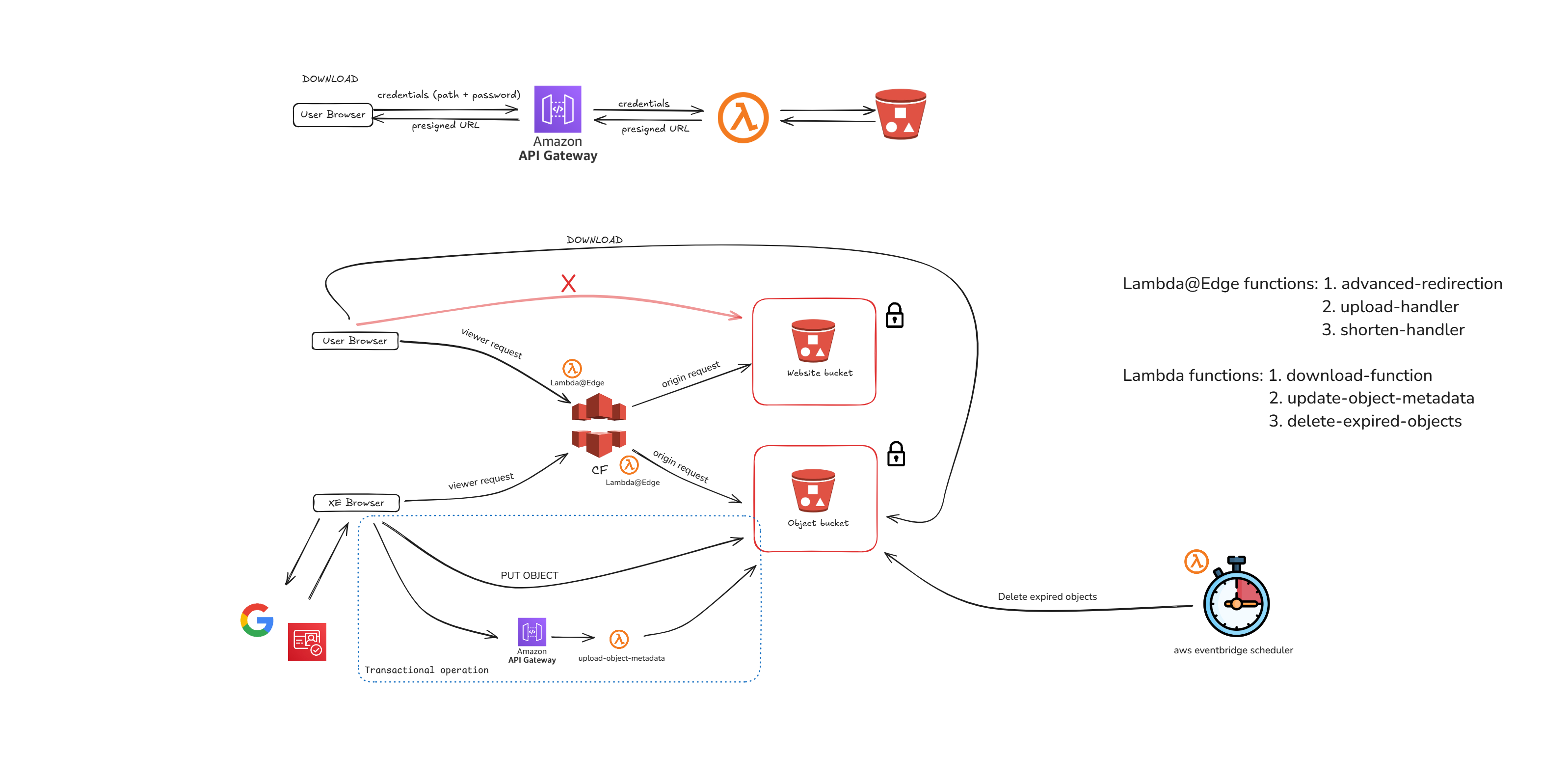

Project Architecture and Service Design

S3 Buckets

This solution uses two isolated S3 buckets, with public access disabled for both. Access is strictly controlled through IAM roles with the appropriate permissions.

First Bucket: Object Storage

The first bucket is used for storing both uploaded files and shortened objects. Each object type has specific metadata associated with it:

- Uploaded Files: These will have metadata such as `delete-path`, `end-date`, `password`, and `original-filename`.
- Shortened Objects: Represented as 0B objects (without any content), these objects will only include metadata like `delete-path`, `end-date`, and `redirection` (the URL where users will be redirected when they request the object).

Both types of objects will also store information about the owner—the person who created the object.

The object key (i.e., the file name in the S3 bucket) is used as the path for accessing the object. This approach allows direct access via the key. Storing the access path in metadata would require iteration through all objects in the bucket to find a match, which is less efficient.

For example, the original file name is not used as the object key; instead, it is stored as metadata under original-filename.
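To make the trade-off concrete, here is a minimal sketch that uses an in-memory `Map` as a stand-in for the objects bucket. The keys, metadata values, the `owner` field name, and the helper names are made up for illustration; only the metadata field names listed above come from this project.

```javascript
// In-memory stand-in for the objects bucket: object key -> { size, metadata }.
// All keys and values are hypothetical examples.
const bucket = new Map([
  ["panda", {
    size: 2048, // uploaded file: has content
    metadata: {
      "delete-path": "dl-a1b2c3d4-panda",
      "end-date": "2030-01-31",
      "password": "<bcrypt hash>",
      "original-filename": "panda-report.pdf",
      "owner": "employee@example.com", // assumed field name
    },
  }],
  ["docs", {
    size: 0, // shortened object: 0B, metadata only
    metadata: {
      "delete-path": "dl-e5f6g7h8-docs",
      "end-date": "2030-01-31",
      "redirection": "https://example.com/some/long/url",
      "owner": "employee@example.com", // assumed field name
    },
  }],
]);

// Access path stored as the object key: a single direct lookup.
function findByKey(path) {
  return bucket.get(path) ?? null;
}

// Access path (or any other value) stored in metadata:
// objects have to be inspected one by one until a match is found.
function findByMetadata(field, value) {
  let inspected = 0;
  for (const [key, object] of bucket) {
    inspected += 1;
    if (object.metadata[field] === value) return { key, inspected };
  }
  return { key: null, inspected };
}
```

With a real bucket, the first function corresponds to one request for a known key, while the second corresponds to listing the bucket and reading the metadata of every object.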

This bucket is directly accessed by authorized users through the frontend or by Lambda functions, including:

- `advanced-redirection`: A Lambda@Edge function that reads metadata and performs redirection or manual deletion when triggered by a delete action.
- `update-file-metadata`: A function that generates sensitive information and updates objects with new metadata.
- `delete-expired-objects`: A function that deletes expired objects based on their `end-date` metadata.
- `download-function`: A function that checks if the provided password matches the object’s password and, if successful, returns a pre-signed URL for downloading the object.

Each Lambda function will be explained in more detail in the "Lambda Functions" section.

Second Bucket: Static Content for CloudFront

The second bucket is used to store static content for the application, with three main folders: download, shorten, and upload. Each folder contains the corresponding HTML, CSS, and JS files. This bucket is set as the origin for a CloudFront distribution, which serves the content.

Access to this bucket is restricted and can only occur through CloudFront. This is achieved by setting origin access control in CloudFront and adjusting the bucket's access policies.
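As an illustration, a bucket policy for origin access control typically allows the CloudFront service principal to read objects, restricted to one distribution. The sketch below builds such a policy document; the bucket name, account ID, and distribution ID are placeholders, and the policy actually deployed in this project may differ.

```javascript
// Builds a bucket policy that lets a single CloudFront distribution
// read objects from the static-content bucket via origin access control.
function buildOacBucketPolicy(bucketName, distributionArn) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Sid: "AllowCloudFrontServicePrincipalReadOnly",
        Effect: "Allow",
        Principal: { Service: "cloudfront.amazonaws.com" },
        Action: "s3:GetObject",
        Resource: `arn:aws:s3:::${bucketName}/*`,
        // Only requests coming from this distribution are allowed.
        Condition: { StringEquals: { "AWS:SourceArn": distributionArn } },
      },
    ],
  };
}

// Placeholder values, for illustration only.
const examplePolicy = buildOacBucketPolicy(
  "example-static-bucket",
  "arn:aws:cloudfront::111122223333:distribution/EXAMPLEID"
);
console.log(JSON.stringify(examplePolicy, null, 2));
```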

CloudFront

We use CloudFront as a content delivery network (CDN) for our project. As mentioned earlier, the origin of this CDN is the S3 bucket that hosts the webpages. The bucket's REST API endpoint (rather than a static website endpoint) is used as the origin, together with origin access control.

We also defined a custom origin header that passes the name of the objects S3 bucket to the Lambda@Edge function (advanced-redirection), which is positioned at the origin request stage. We took this approach because we did not want to hardcode the name of the objects bucket in the Lambda function code. Since Lambda@Edge does not support environment variables, we implemented this solution as described in the blog post AWS Lambda@Edge Node.js "Environment variables are not supported."
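A minimal sketch of how an origin request function can read such a header is shown below. The header name `x-objects-bucket`, the bucket name, and the helper name are assumptions for illustration; the real header name is whatever is configured on the origin in the distribution.

```javascript
// Hypothetical header name (lowercase, as it appears in the event).
const BUCKET_HEADER = "x-objects-bucket";

// Reads the objects bucket name from the custom origin headers of an
// origin request event. For S3 origins, these headers are exposed under
// request.origin.s3.customHeaders, keyed by lowercase header name.
function getObjectsBucket(event) {
  const request = event.Records[0].cf.request;
  const header = request.origin?.s3?.customHeaders?.[BUCKET_HEADER];
  if (!header || header.length === 0) {
    throw new Error(`Missing custom origin header: ${BUCKET_HEADER}`);
  }
  return header[0].value;
}

// Trimmed-down example of an origin request event.
const sampleEvent = {
  Records: [{
    cf: {
      request: {
        uri: "/panda",
        origin: {
          s3: {
            customHeaders: {
              "x-objects-bucket": [
                { key: "X-Objects-Bucket", value: "example-objects-bucket" },
              ],
            },
          },
        },
      },
    },
  }],
};
```

Custom origin headers are not part of a viewer request event, which is why this only works at the origin request stage.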

There are four cache behaviors defined in CloudFront, but they could all be merged into one since we have only one origin. The four-behavior setup was the initial approach, and it hasn't been changed since. Caching is disabled in all behaviors. The functions attached to the /shorten/* and /upload/* behaviors examine the request and, if necessary, append index.html to the request path (e.g., for domain_name/upload/).

The advanced-redirection function performs various tasks, which will be explained in a later section. The upload and shorten Lambda@Edge functions are positioned at the viewer request stage, while advanced-redirection is at the origin request stage to handle the custom header we need to send to it.
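The index.html rewrite performed at the viewer request stage can be sketched as follows. This is an illustrative version, not the deployed code; the helper and handler names are hypothetical.

```javascript
// Rewrites "<prefix>/" to "<prefix>/index.html" and leaves every other
// request untouched. Returning the request tells CloudFront to continue
// processing it.
function appendIndexHtml(event, prefix) {
  const request = event.Records[0].cf.request;
  if (request.uri === `${prefix}/`) {
    request.uri = `${prefix}/index.html`;
  }
  return request;
}

// Lambda@Edge-style handlers for the two behaviors; in a deployment each
// would be exported as the function's handler.
const uploadViewerRequest = async (event) => appendIndexHtml(event, "/upload");
const shortenViewerRequest = async (event) => appendIndexHtml(event, "/shorten");
```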

Authentication

We use an Amazon Cognito Identity Pool to exchange a Google token (provided by our identity provider, accounts.google.com) for AWS credentials. These credentials grant users a role that allows actions such as PutObject in the objects bucket.

The Google API project is configured as internal for Ximedes, which means only Google accounts within our company workspace are accepted.

In the shorten and upload webpage scripts, the onSignIn and credentialsExchange functions handle the exchange from googleToken to AWS credentials.
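As a rough sketch of the exchange, the Google token is passed to the identity pool under the provider key accounts.google.com. The function name, identity pool ID, and region below are placeholders, and the usage comment assumes the AWS SDK for JavaScript v3; the actual onSignIn and credentialsExchange code may be structured differently.

```javascript
// Provider key under which the Google token is passed to the identity pool.
const GOOGLE_PROVIDER = "accounts.google.com";

// Builds the parameters for exchanging a Google ID token for AWS credentials.
function buildCredentialParams(googleToken, identityPoolId, region) {
  if (!googleToken) {
    throw new Error("A Google ID token is required");
  }
  return {
    identityPoolId,
    logins: { [GOOGLE_PROVIDER]: googleToken },
    clientConfig: { region },
  };
}

// Assumed usage with the AWS SDK for JavaScript v3 (not executed here):
//   import { fromCognitoIdentityPool } from "@aws-sdk/credential-providers";
//   const credentials = fromCognitoIdentityPool(
//     buildCredentialParams(googleToken, identityPoolId, region)
//   );
//   const s3 = new S3Client({ region, credentials });
```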

Lambda functions

Lambda@Edge

Overview of Functions

- `upload-handler`: Intercepts requests to the `/upload/*` path at the viewer request position. This function typically forwards requests without modification; however, if the requested path is `/upload/`, it appends `index.html` to the path, resulting in `/upload/index.html`.
- `shorten-handler`: Similar to the `upload-handler`, it intercepts requests to the `/shorten/*` path at the viewer request position. It forwards requests unmodified, except when the requested path is `/shorten/`, in which case it appends `index.html`, making it `/shorten/index.html`.
- `advanced-redirection`: This function performs multiple tasks, detailed below:
  - Basic Redirection: Handles simple redirection scenarios. For example:
    - Root requests (`/` and `/index.html`) are redirected to the main Ximedes website (https://www.ximedes.com).
    - Requests to `/upload` and `/upload/` are directed to `/upload/index.html`.
    - Requests to `/shorten` and `/shorten/` are redirected to `/shorten/index.html`.
  - Object Deletion: Manages requests where the path begins with `dl-`, indicating a delete request. The expected format is `dl-{8-character delete secret}-{object key}`. If the requested object exists and the delete secret matches the one stored in the metadata, the object is deleted from the S3 bucket. Otherwise, an error is returned.
  - Redirection Objects: For requests matching a redirection object name (determined by fetching the object from the S3 bucket and confirming a size of 0B), it redirects the user to the URL defined in the object’s metadata.
  - Download Page: If the object key in the S3 objects bucket matches the requested path and if that object has content (i.e., its size is greater than 0B), it is treated as an uploaded file. The function then redirects the user to the download page with the object key in the query string (e.g., `/download/index.html?path=panda` for a request to `/panda`). From there, the user can enter a password and download the object.
  - Error Handling: If none of the above cases apply, an error is returned, indicating that the requested resource could not be found.

The `advanced-redirection` function is positioned at the origin request stage because, in order to function properly, it requires the name of the S3 bucket where objects are stored. To provide this information, we set up a custom origin header in the CloudFront distribution, as Lambda@Edge does not support environment variables. This approach, detailed in this StackOverflow discussion, allows the function to receive the required S3 bucket name.
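The decision logic of advanced-redirection can be summarized with the sketch below. It is a simplified illustration under a few assumptions: the `lookup` argument is a synchronous stand-in for fetching an object's size and metadata from S3 (the real call is asynchronous), the delete secret is checked by comparing the requested path with a `delete-path` metadata value, and the shape of the returned decision objects is invented for this example.

```javascript
const MAIN_SITE = "https://www.ximedes.com";

// dl-{8-character delete secret}-{object key}
const DELETE_PATH = /^dl-(.{8})-(.+)$/;

function parseDeletePath(path) {
  const match = DELETE_PATH.exec(path);
  return match ? { secret: match[1], key: match[2] } : null;
}

const redirect = (location) => ({ action: "redirect", location });
const notFound = () => ({ action: "error", reason: "not found" });

// uri: request path, e.g. "/panda".
// lookup(key): returns { size, metadata } for an existing object, else null.
function route(uri, lookup) {
  const path = uri.replace(/^\/+/, "");

  // Basic redirection
  if (path === "" || path === "index.html") return redirect(MAIN_SITE);
  if (path === "upload" || path === "upload/") return redirect("/upload/index.html");
  if (path === "shorten" || path === "shorten/") return redirect("/shorten/index.html");

  // Object deletion
  const deletion = parseDeletePath(path);
  if (deletion) {
    const target = lookup(deletion.key);
    // Assumption: the metadata stores the full delete path.
    if (target && target.metadata["delete-path"] === path) {
      return { action: "delete", key: deletion.key };
    }
    return { action: "error", reason: "invalid delete request" };
  }

  const object = lookup(path);
  if (!object) return notFound();

  // Redirection object: 0B, target URL in metadata
  if (object.size === 0) return redirect(object.metadata["redirection"]);

  // Uploaded file: send the user to the download page
  return redirect(`/download/index.html?path=${encodeURIComponent(path)}`);
}
```

For example, with an uploaded object stored under the key `panda`, `route("/panda", lookup)` yields a redirect to `/download/index.html?path=panda`.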

Lambda

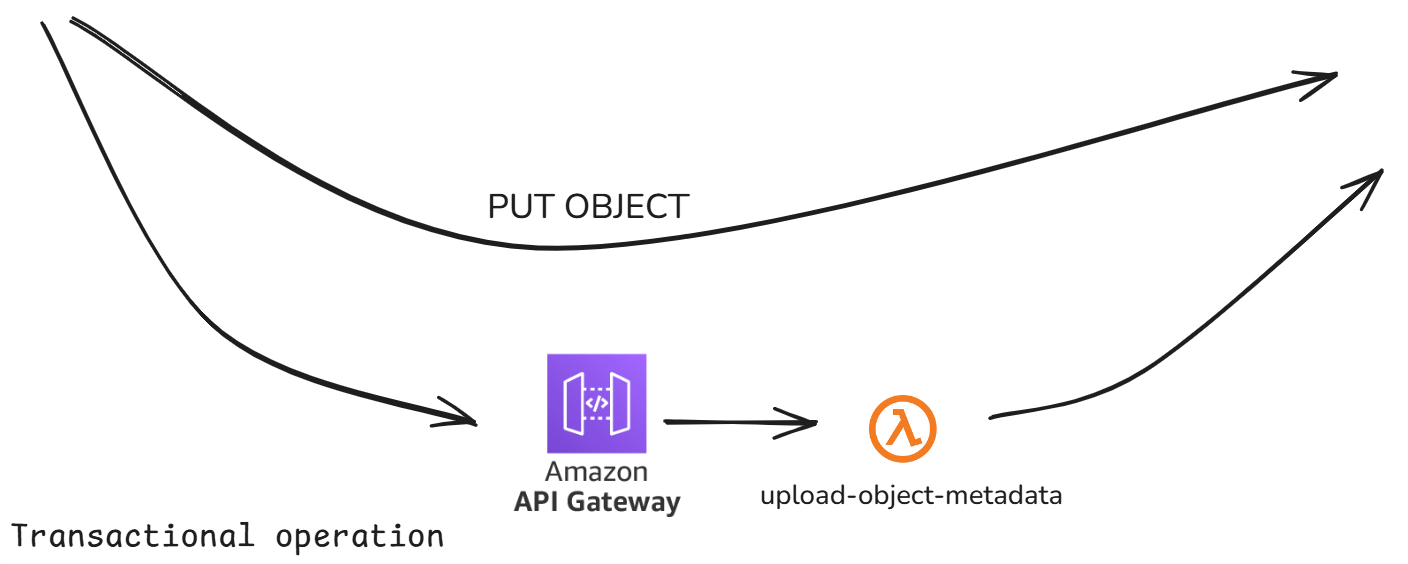

update-file-metadata: This Lambda function is necessary and can be seen in the diagram where a Ximedes employee's (XE) browser connects to the S3 object bucket.

We access this Lambda function via API Gateway from the front-end JavaScript in both the upload and URL shortening scenarios. It was added to enhance security and avoid generating sensitive metadata in the front-end scripts.

Here’s how it works: First, we need to create an initial object without any metadata, which means generating an object key (unless the user has provided their own) and the Body (content) in the case of a file upload. In theory, the only parameter that can be generated in the front-end JavaScript is the object key. However, it is expected that users will usually specify this themselves. Future implementations may improve the auto-generated object names. For example, in the file upload case, the object name will be based on the uploaded file's name, and in the URL shortening case, it will be based on the original URL where the redirection should occur.

Once the `PutObject` operation executes successfully, we make a call to the API Gateway endpoint, triggering this Lambda function. We send the metadata in JSON format, based on the user's specifications or empty values when the system should generate them.

The JSON format differs depending on whether it's an upload or shortening scenario, and we distinguish between the two by checking if the `originalUrl` property is present in the JSON (which is mandatory for URL shortening).

If the `originalUrl` property exists, we handle the shortening scenario by generating a delete path and performing a `CopyObjectCommand` with the updated metadata. Since object metadata cannot be modified after the object is created, we essentially recreate the object with the same content, but with the added metadata.

If the `originalUrl` property is absent, we are handling the upload scenario. In this case, we generate the delete path, a password (if the user hasn’t provided their own), set the `endDate` to 4 weeks in the future (if the user hasn’t specified one), and add the `original-filename`. The password is also hashed with Bcrypt before being written in the metadata.
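The branching described above can be sketched as a pure function that turns the incoming JSON into the metadata to write. Apart from `originalUrl` and `endDate`, the payload field names (`key`, `password`, `originalFilename`) are assumptions, as are the helper names; secret generation, password generation, and hashing are injected so the sketch does not depend on a particular library.

```javascript
const FOUR_WEEKS_MS = 28 * 24 * 60 * 60 * 1000;

// payload: parsed JSON sent by the front end after PutObject succeeded.
// helpers: { generateSecret, generatePassword, hashPassword }; the hashing
//          helper stands in for a bcrypt call.
function buildMetadata(payload, helpers, now = new Date()) {
  const deletePath = `dl-${helpers.generateSecret()}-${payload.key}`;

  // Shortening scenario: originalUrl is mandatory and marks the request type.
  if ("originalUrl" in payload) {
    const metadata = { "delete-path": deletePath, redirection: payload.originalUrl };
    if (payload.endDate) metadata["end-date"] = payload.endDate;
    return metadata;
  }

  // Upload scenario: fill in whatever the user left empty.
  const password = payload.password || helpers.generatePassword();
  const endDate =
    payload.endDate || new Date(now.getTime() + FOUR_WEEKS_MS).toISOString();

  return {
    "delete-path": deletePath,
    "end-date": endDate,
    password: helpers.hashPassword(password), // only the hash is stored
    "original-filename": payload.originalFilename,
  };
}
```

The resulting object would then be passed as `Metadata` to a `CopyObjectCommand` that copies the object onto itself with `MetadataDirective: "REPLACE"`, which is how S3 allows the metadata of an existing object to be rewritten.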

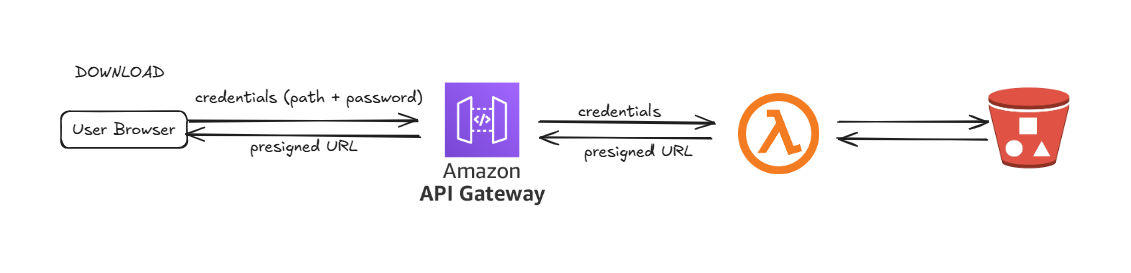

download-function: This Lambda function is triggered via API Gateway when a download request is made.

The function extracts the object key and the provided password from the incoming JSON request. It then checks if the object with the specified key contains the correct password metadata that matches the provided password.
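A simplified sketch of this check, together with the request parameters that would be signed on success, is shown below. The JSON field names `key` and `password`, the function name, and the result shape are assumptions; `verifyPassword` stands in for a bcrypt comparison, and `head` represents the size and metadata fetched for the object (or `null` if it does not exist).

```javascript
function buildDownloadRequest(bucket, body, head, verifyPassword) {
  const { key, password } = JSON.parse(body);

  // Reject unknown objects and wrong passwords in the same way.
  if (!head || !verifyPassword(password, head.metadata["password"])) {
    return { ok: false };
  }

  // Fall back to the object key if no original filename was stored.
  const filename = head.metadata["original-filename"] || key;
  // Replace characters that would break the quoted filename.
  const safeName = filename.replace(/["\\]/g, "_");

  return {
    ok: true,
    // Parameters for a GetObject request that is then presigned.
    getObjectParams: {
      Bucket: bucket,
      Key: key,
      ResponseContentDisposition: `attachment; filename="${safeName}"`,
    },
  };
}
```

With the AWS SDK for JavaScript v3, `getObjectParams` would typically be wrapped in a `GetObjectCommand` and passed to `getSignedUrl` from `@aws-sdk/s3-request-presigner`.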

If the passwords match, a presigned URL for the object is generated, allowing secure access. The

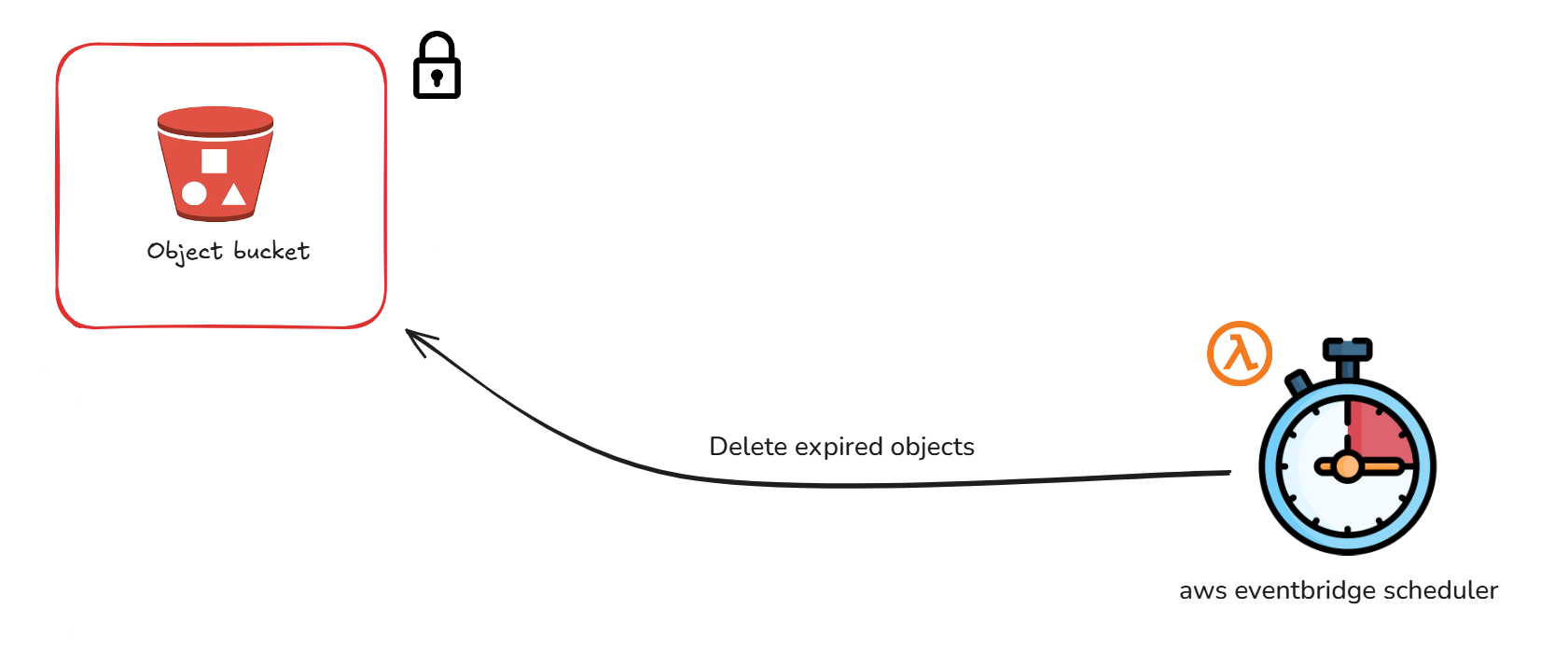

ResponseContentDispositionheader is set so that when the download process starts, the original filename (stored in the object metadata) replaces the S3 object key in the download prompt.delete-expired-objects: This Lambda function automatically deletes expired objects.

Triggered daily by an AWS EventBridge Schedule at midnight (Europe/Amsterdam timezone), the function iterates through all objects in the S3 bucket and deletes those with an `endDate` earlier than the current date.
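The expiry check itself can be sketched as a filter over objects whose metadata has already been fetched. The function name and the `end-date` metadata key are assumptions based on the metadata list in the S3 section. Note that listing a bucket does not return user-defined metadata, so a real implementation needs a separate metadata request per object before it can apply this filter.

```javascript
// objects: array of { key, metadata } for every object in the bucket.
// Returns the keys of the objects whose end date lies before `now`.
function findExpiredKeys(objects, now = new Date()) {
  return objects
    .filter(({ metadata }) => {
      const end = Date.parse(metadata["end-date"]);
      // Objects without a parseable end date are kept.
      return !Number.isNaN(end) && end < now.getTime();
    })
    .map(({ key }) => key);
}
```

The returned keys would then be passed to the S3 delete operation.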